For years, Dell Technologies has been known as a hardware leader—delivering rock-solid servers, reliable storage, and enterprise-grade infrastructure. But in 2025, something has shifted.

At NVIDIA’s GTC 2025 and across its broader strategy, Dell made one thing very clear: the future of the company isn’t just about hardware anymore—it’s about building a full-fledged AI ecosystem.

This isn’t marketing fluff or a surface-level integration. Dell is reengineering how enterprises build, deploy, and scale artificial intelligence.

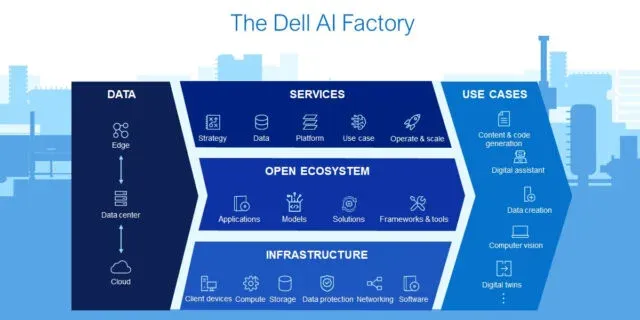

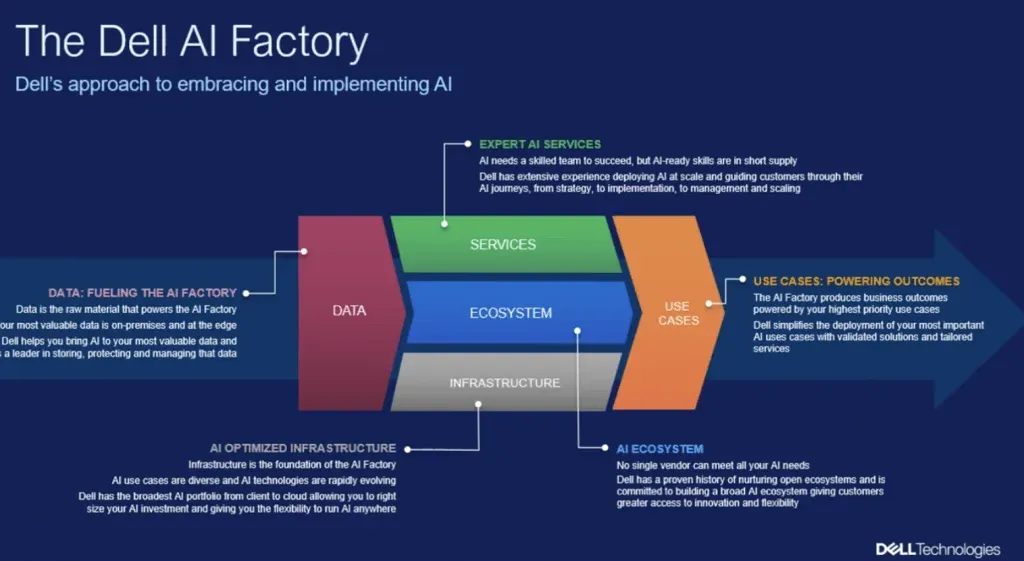

The Dell AI Factory

At the heart of Dell’s AI push is the Dell AI Factory, a full-stack architecture built in partnership with NVIDIA. While it includes high-performance servers like the PowerEdge XE9680 loaded with NVIDIA H100 GPUs, the real innovation lies in how everything works together.

This isn't just a rack of GPU-packed servers. It's a validated, end-to-end stack designed for AI workloads across:

- Model training and tuning (on-prem or hybrid)
- Generative AI applications like copilots and summarization
- Data preparation and pipeline automation
- Edge inference for low-latency use cases

At its core, the AI Factory combines Dell PowerEdge servers, NVIDIA H100 GPUs, and NVIDIA AI Enterprise software. What makes it deployable, manageable, and repeatable in real business environments is everything wrapped around that core: orchestration layers, observability tooling, and security frameworks.

Smarter Infrastructure, Built-In Intelligence

Dell’s hardware is still the foundation, but automation and observability now sit on top of it. Tools like CloudIQ and APEX Navigator continuously monitor the infrastructure and help optimize it.

These platforms deliver AI-powered telemetry, anomaly detection, workload balancing, and predictive alerts. Infrastructure that used to be static and manually managed becomes a dynamic system that flags problems before they escalate, reducing human overhead and increasing operational resilience.

And if you’re deploying in hybrid or multicloud environments, Dell’s infrastructure doesn’t force a rip-and-replace model. It integrates with existing ecosystems, supporting Kubernetes and Kubernetes platforms such as Red Hat OpenShift and VMware Tanzu, as well as major cloud providers like Microsoft Azure and AWS.

Scaling AI Beyond the Data Center

AI isn’t only happening in server rooms anymore. With the rise of real-time applications—like autonomous machinery, smart retail, and predictive maintenance—AI must extend to the edge. Dell’s answer to this challenge is NativeEdge, its edge platform built specifically for running containerized AI models outside the data center.

Using NativeEdge, enterprises can deploy, monitor, and manage AI workloads from a central platform, no matter where the compute sits. This is crucial for industries where latency, connectivity, and data privacy are non-negotiable.

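Large datasets exert data gravity: because moving them is slow and costly, applications and services tend to move toward the data instead. By combining edge capability with scalable data infrastructure (like Dell PowerScale and ECS), Dell aims to make data gravity an opportunity rather than a roadblock, letting enterprises innovate closer to where data is created.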

Security & Trust Are Core to the Ecosystem

AI introduces new risks: model leakage, training data exposure, inferencing vulnerabilities. Dell is addressing this with:

- Project Fort Zero – Dell’s enterprise-ready Zero Trust framework that’s now integrated into AI environments.
- Secure boot, firmware validation, and encryption at rest/in-flight baked into PowerEdge and storage systems.
- Role-based access and audit trails built into orchestration and APEX platforms.

You don’t have to bolt on security after the fact—it’s part of the ecosystem.

Dell’s transition from hardware manufacturer to AI ecosystem builder is more than a strategic pivot—it’s a reflection of where enterprise technology is heading. AI isn’t a single tool or platform. It’s a complex system that requires tight integration across infrastructure, software, and operations.

With its full-stack AI vision, Dell is positioning itself to lead in a world where AI isn’t an add-on—it’s the foundation of how we build, work, and innovate.